A Sand Pendulum That Creates A Beautiful Pattern Only By Its Movement.


But why does the ellipse change shape?

The pattern gets smaller because the pendulum's mechanical energy is not conserved: it steadily decreases as friction and air resistance drain it. At the same time, the mass in the pendulum gets smaller as the sand pours out, and the center of mass lowers as a function of time. Easy as that, an amazing pattern arises through the laws of physics.
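To make the shrinking ellipse concrete, here is a minimal sketch of the trace, not a model of the actual pendulum in the video. It assumes small swings, a constant pendulum length, and a simple exponential energy loss; the function name `sand_trace` and every parameter value are made up for illustration, and the effect of the changing mass and center of mass is ignored.

```python
import math

def sand_trace(a=1.0, b=0.5, length=1.0, damping=0.05, t_end=60.0, dt=0.01, g=9.81):
    """Sample the path of a damped, small-angle pendulum swinging in two directions.

    a, b    -- starting amplitudes along x and y (assumed values, in meters)
    damping -- exponential decay rate of the amplitude (assumed value, 1/s)
    """
    omega = math.sqrt(g / length)        # small-angle angular frequency
    steps = round(t_end / dt)
    points = []
    for i in range(steps + 1):
        t = i * dt
        decay = math.exp(-damping * t)   # amplitude shrinks as energy is lost
        x = a * decay * math.cos(omega * t)
        y = b * decay * math.sin(omega * t)
        points.append((t, x, y))
    return points

trace = sand_trace()
first_radius = math.hypot(trace[0][1], trace[0][2])
last_radius = math.hypot(trace[-1][1], trace[-1][2])
print(f"distance from center at start: {first_radius:.3f} m")
print(f"distance from center at end:   {last_radius:.3f} m")
```

Plotting the `x` and `y` columns against each other gives an ellipse that spirals inward. A real sand pendulum also changes its period as the sand drains, which this sketch leaves out.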


Depixellation? Or hallucination?

There’s an application for neural nets called “photo upsampling” which is designed to turn a very low-resolution photo into a higher-res one.

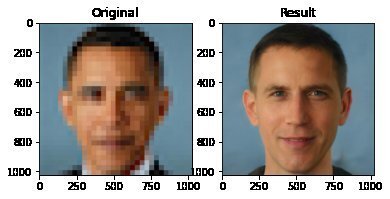

This is an image from a recent paper demonstrating one of these algorithms, called “PULSE: Self-Supervised Photo Upsampling via Latent Space Exploration of Generative Models.”

It’s the neural net equivalent of shouting “enhance!” at a computer in a movie - the resulting photo is MUCH higher resolution than the original.

Could this be a privacy concern? Could someone use an algorithm like this to identify someone who’s been blurred out? Fortunately, no. The neural net can’t recover detail that doesn’t exist - all it can do is invent detail.

This becomes more obvious when you downscale a photo, give it to the neural net, and compare its upscaled version to the original.

As it turns out, there are lots of different faces that can be downscaled into that single low-res image, and the neural net’s goal is just to find one of them. Here it has found a match - why are you not satisfied?
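A toy example shows why many different high-res images can share one low-res version. This is only a sketch: it assumes downscaling means averaging each 2x2 block of grayscale pixels, which may not match the exact downscaling PULSE uses, and the two tiny "images" are made-up numbers rather than real photos.

```python
def downscale(image, factor):
    """Average each factor x factor block of a 2D list of grayscale values."""
    height, width = len(image), len(image[0])
    out = []
    for r in range(0, height, factor):
        row = []
        for c in range(0, width, factor):
            block = [image[r + i][c + j]
                     for i in range(factor) for j in range(factor)]
            row.append(sum(block) / len(block))
        out.append(row)
    return out

# Two different 4x4 "high-res" images (hypothetical pixel values).
image_a = [[10, 30, 200, 200],
           [30, 10, 200, 200],
           [0, 100, 50, 150],
           [100, 0, 150, 50]]
image_b = [[20, 20, 190, 210],
           [20, 20, 210, 190],
           [50, 50, 100, 100],
           [50, 50, 100, 100]]

low_a = downscale(image_a, 2)
low_b = downscale(image_b, 2)
print(low_a == low_b)      # the low-res versions are identical
print(image_a == image_b)  # the high-res originals are not
```

Any upscaler handed that 2x2 image has no way to tell which of the two originals it came from, so whatever detail it returns is a guess.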

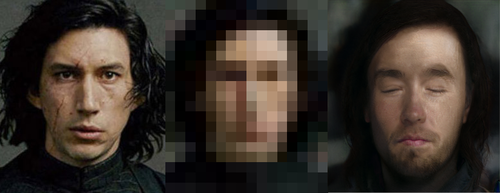

And it’s very sensitive to the exact position of the face, as I found out in this horrifying moment below. I verified that yes, if you downscale the upscaled image on the right, you’ll get something that looks very much like the picture in the center. Stand way back from the screen and blur your eyes (basically, make your own eyes produce a lower-resolution image) and the three images below will look more and more alike. So technically the neural net did an accurate job at its task.

A tighter crop improves the image somewhat. Somewhat.

The neural net reconstructs what it’s been rewarded to see, and since it’s been trained to produce human faces, that’s what it will reconstruct. So if I were to feed it an image of a plush giraffe, for example…

Given a pixellated image of anything, it’ll invent a human face to go with it, like some kind of dystopian computer system that sees a suspect’s image everywhere. (Building an algorithm that upscales low-res images to match faces in a police database would be both a horrifying misuse of this technology and not out of character with how law enforcement currently manipulates photos to generate matches.)

However, speaking of what the neural net’s been rewarded to see - shortly after this particular neural net was released, Twitter user chicken3gg posted this reconstruction:

Others then did experiments of their own, and many of them, including the authors of the original paper on the algorithm, found that the PULSE algorithm had a noticeable tendency to produce white faces, even if the input image hadn’t been of a white person. As James Vincent wrote in The Verge, “It’s a startling image that illustrates the deep-rooted biases of AI research.”

Biased AIs are a well-documented phenomenon. When its task is to copy human behavior, AI will copy everything it sees, not knowing what parts it would be better not to copy. Or it can learn a skewed version of reality from its training data. Or its task might be set up in a way that rewards - or at the least doesn’t penalize - a biased outcome. Or the very existence of the task itself (like predicting “criminality”) might be the product of bias.

In this case, the AI might have been inadvertently rewarded for reconstructing white faces if its training data (Flickr-Faces-HQ) had a large enough skew toward white faces. Or, as the authors of the PULSE paper pointed out (in response to the conversation around bias), the standard benchmark that AI researchers use for comparing their accuracy at upscaling faces is based on the CelebA HQ dataset, which is 90% white. So even if an AI did a terrible job at upscaling other faces, but an excellent job at upscaling white faces, it could still technically qualify as state-of-the-art. This is definitely a problem.
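The arithmetic behind that last point can be sketched in a few lines. The 90% share comes from the paragraph above; the per-group scores are hypothetical numbers chosen only to illustrate the effect, not results from the PULSE paper or any benchmark.

```python
def overall_score(share_majority, score_majority, score_minority):
    """Benchmark score as the average over all test images, weighted by group share."""
    share_minority = 1.0 - share_majority
    return share_majority * score_majority + share_minority * score_minority

# 90% of benchmark faces are white (from the text); both scores are made up.
skewed = overall_score(0.90, score_majority=0.95, score_minority=0.30)
balanced = overall_score(0.50, score_majority=0.95, score_minority=0.30)

print(f"score on a 90/10 benchmark: {skewed:.3f}")
print(f"score on a 50/50 benchmark: {balanced:.3f}")
```

With these made-up numbers, the same model looks far stronger on the skewed benchmark than on a balanced one, because its poor results on 10% of the images barely move the average.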

A related problem is the huge lack of diversity in the field of artificial intelligence. Even an academic project with art as its main application should not have gone all the way to publication before someone noticed that it was hugely biased. Several factors are contributing to the lack of diversity in AI, including anti-Black bias. The repercussions of this striking example of bias, and of the conversations it has sparked, are still being strongly felt in a field that’s long overdue for a reckoning.

Bonus material this week: an ongoing experiment that’s making me question not only what madlibs are, but what even are sentences. Enter your email here for a preview.

My book on AI is out, and, you can now get it any of these several ways! Amazon - Barnes & Noble - Indiebound - Tattered Cover - Powell’s - Boulder Bookstore

Speaking of pretty flowers, may I present to you the “Eighteen Scholars”, the flower of my heart, a variation of Camellia japonica L. Its uniqueness lies in the layers and layers of petals: one flower can hold as many as 130 petals.

Named “Eighteen Scholars” in Chinese because at the most, one bush can have up to eighteen of these pretty darlings :3

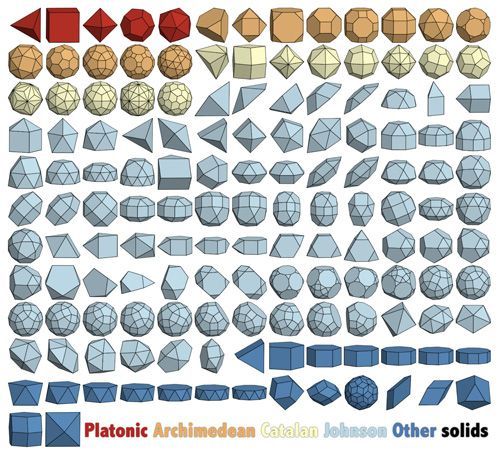

gotta catch em all

Co-authored by physicists Ben Tippett and David Tsang (non-fictional physicists at the fictional Gallifrey Polytechnic Institute and Gallifrey Institute of Technology, respectively), the paper – which you can access free of charge over on arXiv – presents a spacetime geometry that would make retrograde time travel possible. Such a spacetime geometry, write the researchers, would emulate “what a layperson would describe as a time machine.”

Neutron Stars Are Even Weirder Than We Thought

Let’s face it, it’s hard for rapidly-spinning, crushed cores of dead stars NOT to be weird. But we’re only beginning to understand how truly bizarre these objects — called neutron stars — are.

Neutron stars are the collapsed remains of massive stars that exploded as supernovae. In each explosion, the outer layers of the star are ejected into their surroundings. At the same time, the core collapses, smooshing more than the mass of our Sun into a sphere about as big as the island of Manhattan.

Our Neutron star Interior Composition Explorer (NICER) telescope on the International Space Station is working to discover the nature of neutron stars by studying a specific type, called pulsars. Some recent results from NICER are showing that we might have to update how we think about pulsars!

Here are some things we think we know about neutron stars:

Pulsars are rapidly spinning neutron stars ✔︎

Pulsars get their name because they emit beams of light that we see as flashes. Those beams sweep in and out of our view as the star rotates, like the rays from a lighthouse.

Pulsars can spin ludicrously fast. The fastest known pulsar spins 43,000 times every minute. That’s as fast as blender blades! Our Sun is a bit of a slowpoke compared to that — it takes about a month to spin around once.
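For a sense of scale, here is a quick back-of-the-envelope comparison of the two spin rates. It is a rough sketch: the 43,000 rotations per minute comes from the text, while treating "about a month" as exactly 30 days is an assumption made here for the arithmetic.

```python
PULSAR_ROTATIONS_PER_MINUTE = 43_000   # figure quoted in the text
SUN_ROTATION_DAYS = 30                 # assumption: "about a month" taken as 30 days

# Rotations per second for the pulsar.
pulsar_rotations_per_second = PULSAR_ROTATIONS_PER_MINUTE / 60

# How many times the pulsar spins while the Sun turns once.
minutes_per_sun_rotation = SUN_ROTATION_DAYS * 24 * 60
pulsar_spins_per_sun_rotation = PULSAR_ROTATIONS_PER_MINUTE * minutes_per_sun_rotation

print(f"pulsar: about {pulsar_rotations_per_second:.0f} rotations per second")
print(f"pulsar spins per single solar rotation: {pulsar_spins_per_sun_rotation:,}")
```

Under that 30-day assumption, the pulsar completes well over a billion rotations in the time the Sun takes to turn once.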

The beams come from the poles of their strong magnetic fields ✔︎

Pulsars also have magnetic fields, like the Earth and Sun. But like everything else with pulsars, theirs are super-strength. The magnetic field on a typical pulsar is billions to trillions of times stronger than Earth’s!

Near the magnetic poles, the pulsar’s powerful magnetic field rips charged particles from its surface. Some of these particles follow the magnetic field. They then return to strike the pulsar, heating the surface and causing some of the sweeping beams we see.

The beams come from two hot spots… ❌❓✔︎ 🤷🏽

Think of the Earth’s magnetic field — there are two poles, the North Pole and the South Pole. That’s standard for a magnetic field.

On a pulsar, the spinning magnetic field attracts charged particles to the two poles. That means there should be two hot spots, one at the pulsar’s north magnetic pole and the other at its south magnetic pole.

This is where things start to get weird. Two groups mapped a pulsar, known as J0030, using NICER data. One group found that there were two hot spots, as we might have expected. The other group, though, found that their model worked a little better with three (3!) hot spots. Not two.

… that are circular … ❌❓✔︎ 🤷🏽

The particles that cause the hot spots follow the magnetic field lines to the surface. This means they are concentrated at each of the magnetic poles. We expect the magnetic field to appear nearly the same in any direction when viewed from one of the poles. Such symmetry would produce circular hot spots.

In mapping J0030, one group found that one of the hot spots was circular, as expected. But the second spot may be a crescent. The second team found its three spots worked best as ovals.

… and lie directly across from each other on the pulsar ❌❓✔︎ 🤷🏽

Think back to Earth’s magnetic field again. The two poles are on opposite sides of the Earth from each other. When astronomers first modeled pulsar magnetic fields, they made them similar to Earth’s. That is, the magnetic poles would lie at opposite sides of the pulsar.

Since the hot spots happen where the magnetic poles cross the surface of the pulsar, we would expect the beams of light to come from opposite sides of the pulsar.

But, when those groups mapped J0030, they found another surprising characteristic of the spots. All of the hot spots appear in the southern half of the pulsar, whether there were two or three of them.

This also means that the pulsar’s magnetic field is more complicated than our initial models!

J0030 is the first pulsar where we’ve mapped details of the heated regions on its surface. Will others have similarly bizarre-looking hotspots? Will they bring even more surprises? We’ll have to stay tuned to NICER to find out!

And check out the video below for more about how this measurement was done.

Make sure to follow us on Tumblr for your regular dose of space: http://nasa.tumblr.com.

Eclipse Across America

On August 21, 2017, the United States experienced a solar eclipse!

An eclipse occurs when the Moon temporarily blocks the light from the Sun. Within the narrow, 60- to 70-mile-wide band stretching from Oregon to South Carolina called the path of totality, the Moon completely blocked out the Sun’s face; elsewhere in North America, the Moon covered only a part of the star, leaving a crescent-shaped Sun visible in the sky.

During this exciting event, we were collecting your images and reactions online.

Here are a few images of this celestial event…take a look:

This composite image, made from four frames, shows the International Space Station, with a crew of six onboard, as it transits the Sun at roughly five miles per second during a partial solar eclipse, as seen from Northern Cascades National Park in Washington. Onboard as part of Expedition 52 are: NASA astronauts Peggy Whitson, Jack Fischer, and Randy Bresnik; Russian cosmonauts Fyodor Yurchikhin and Sergey Ryazanskiy; and ESA (European Space Agency) astronaut Paolo Nespoli.

Credit: NASA/Bill Ingalls

The Baily’s Beads effect is seen as the Moon makes its final move over the Sun during the total solar eclipse on Monday, August 21, 2017, above Madras, Oregon.

Credit: NASA/Aubrey Gemignani

This image from one of our Twitter followers in Seattle, WA, shows the eclipse projected through tree leaves as crescent-shaped shadows.

Credit: Logan Johnson

“The eclipse in the palm of my hand”. The eclipse is seen here through an indirect method, known as a pinhole projector, by one of our followers on social media from Arlington, TX.

Credit: Mark Schnyder

Through the lens on a pair of solar filter glasses, a social media follower captures the partial eclipse from Norridgewock, ME.

Credit: Mikayla Chase

While most of us watched the eclipse from Earth, six humans had the opportunity to view the event from 250 miles above on the International Space Station. European Space Agency (ESA) astronaut Paolo Nespoli captured this image of the Moon’s shadow crossing America.

Credit: Paolo Nespoli

This composite image shows the progression of a partial solar eclipse over Ross Lake, in Northern Cascades National Park, Washington. The beautiful series of the partially eclipsed sun shows the full spectrum of the event.

Credit: NASA/Bill Ingalls

In this video captured at 1,500 frames per second with a high-speed camera, the International Space Station, with a crew of six onboard, is seen in silhouette as it transits the sun at roughly five miles per second during a partial solar eclipse, Monday, Aug. 21, 2017 near Banner, Wyoming.

Credit: NASA/Joel Kowsky

To see more images from our NASA photographers, visit: https://www.flickr.com/photos/nasahqphoto/albums/72157685363271303


Interesting submission rk1232! Thanks for the heads up! :D :D
