Regarding Fractals And Non-Integral Dimensionality

Alright, I know it’s past midnight (at least it is where I am), but let’s talk about fractal geometry.

Fractals

If you don’t know what fractals are, they’re essentially any shape that gets rougher (or has more detail) as you zoom in, rather than smoother. Non-fractals include simple geometric shapes like squares, circles, and triangles, while fractals include more complex or natural shapes like the coast of Great Britain, Sierpinski’s Triangle, or a Koch Snowflake.

Fractals, in turn, can be broken down further. Some fractals are the product of an iterative process and contain smaller copies of themselves throughout. Others are more natural and just happen to be jagged.

Fractals and Non-Integral Dimensionality

Now that we’ve gotten the explanation of what fractals are out of the way, let’s talk about their most interesting property: non-integral dimensionality. The idea that fractals do not actually have an integral dimension was popularized by the mathematician Benoit Mandelbrot, building on earlier work by Felix Hausdorff.

He studied fractals extensively, and one of the most famous even bears his name: the Mandelbrot Set. The important thing about him is that he realized fractals are interesting when it comes to defining their dimension. The dimension of most regular shapes is easy to find: a line has finite length but no width or height; a square has finite length and width but no height; and a cube has finite length, width, and height. Take note that each dimension has its own measure: length, area, or volume. The problem with many fractals is that they can’t easily be measured in these terms at all. Take Sierpinski’s triangle as an example.

Is this shape one- or two-dimensional? Many would say two-dimensional at first glance, but the same shape can also be created using a line rather than a triangle.

So now it seems a bit trickier. Is it one-dimensional since it can be made out of a line, or two-dimensional since it can be made out of a triangle? The answer is neither. If we treat it as a two-dimensional object, its two-dimensional measure (area) is zero, because we’ve technically taken away all of its area by removing smaller and smaller triangles from every available space. On the other hand, if we treat it as a one-dimensional object, its one-dimensional measure (length) is infinite, because the line keeps getting longer and longer to stretch around each and every hole, of which there are infinitely many. So now we run into a problem: if it’s neither one- nor two-dimensional, then what is its dimension? To find out, we can start with non-fractals.
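To make the zero-area, infinite-length argument concrete, here is a minimal Python sketch. It assumes the usual construction (each step keeps 3 of the 4 half-size triangles inside every remaining triangle) and assumes the line-based version replaces every segment with three segments half as long at each step, as in the Sierpinski arrowhead curve. `sierpinski_measures` is a hypothetical helper name.

```python
from fractions import Fraction


def sierpinski_measures(iterations: int) -> tuple[Fraction, Fraction]:
    """Return (area, curve length) after `iterations` steps, relative to the starting values."""
    # Each step keeps 3 of the 4 half-size triangles, so 3/4 of the remaining area survives.
    area = Fraction(3, 4) ** iterations
    # Each step replaces every segment with 3 segments half as long, so length grows by 3/2.
    length = Fraction(3, 2) ** iterations
    return area, length


for n in (1, 5, 10, 20):
    area, length = sierpinski_measures(n)
    print(f"n={n:2d}  area={float(area):.5f}  length={float(length):.1f}")
```

The area heads toward zero and the length grows without bound as the number of iterations increases, which is exactly the dilemma described above.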

Measuring Integral Dimensions and Applying to Fractals

Let’s start with a one-dimensional line. The measure for a one-dimensional object is length. If we were to scale the line down by one-half, what is the fraction of the new length compared to the original length?

The new length of each line is one-half the original length.

Now let’s try the same thing for squares. The measure for a two-dimensional object is area. If we were to scale down a square by one-half (that is to say, if we were to divide the square’s length in half and divide its width in half), what is the fraction of the new area compared to the original area?

The new area of each square is one-quarter the original area.

If we were to try the same with cubes, the volume of each new cube would be one-eighth the original volume of a cube. These fractions provide us with a pattern we can work with.

In one dimension, the new length (one-half) is equal to the scaling factor (one-half) raised to the first power (because a line is one-dimensional).

In two dimensions, the new area (one-quarter) is equal to the scaling factor (one-half) raised to the second power (because a square is two-dimensional).

In three dimensions, the same pattern holds: the new volume (one-eighth) is equal to the scaling factor (one-half) raised to the third power.

We can infer from this trend that the dimension of an object could be (not is) defined as the exponent on the scaling factor that gives the object's new measure. To put it in mathematical terms, with D as the dimension:

new measure (as a fraction of the original) = (scaling factor)^D

Examples of this equation would include the one-dimensional line, the two-dimensional square, and the three-dimensional cube:

½ = ½^1

¼ = ½^2

1/8 = ½^3
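A quick check of this pattern with exact fractions, as a small sketch; `new_measure` is a hypothetical helper name, not anything from the original post.

```python
from fractions import Fraction


def new_measure(scaling_factor: Fraction, dimension: int) -> Fraction:
    """Measure of a scaled copy, as a fraction of the original: scaling_factor ** dimension."""
    return scaling_factor ** dimension


half = Fraction(1, 2)
for dimension, shape in [(1, "line"), (2, "square"), (3, "cube")]:
    print(f"{shape}: {new_measure(half, dimension)}")  # 1/2, 1/4, 1/8
```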

Now this equation can be used to define the dimensionality of a given fractal. Let’s try Sierpinski’s Triangle again.

Here we can see that the triangle as a whole is made of three smaller versions of itself, each scaled down by one-half (each side of a smaller triangle is half the length of a side of the whole triangle). Since each smaller copy is one-third of the whole, we can plug the numbers into our equation and leave the dimension, D, as the unknown:

1/3 = ½^D

To solve for D, we need to know what power ½ must be raised to in order to get 1/3. Since ½^D is the same as 1/(2^D), the equation 1/3 = ½^D is equivalent to 3 = 2^D, so we can use a base-2 logarithm:

log_2(3) = roughly 1.585
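The same calculation works for any self-similar shape made of a given number of copies, each scaled down by the same factor. A minimal sketch; `similarity_dimension` is a hypothetical helper name.

```python
import math


def similarity_dimension(copies: int, scaling_factor: float) -> float:
    """Solve 1/copies = scaling_factor ** D for D."""
    # Taking logs of both sides: log(1/copies) = D * log(scaling_factor).
    return math.log(1 / copies) / math.log(scaling_factor)


print(round(similarity_dimension(3, 1 / 2), 3))  # 1.585 (Sierpinski's triangle)
```

As a sanity check, four half-size squares make up a square, and `similarity_dimension(4, 1 / 2)` comes out as (floating-point) 2.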

So we can conclude that Sierpinski’s triangle is roughly 1.585-dimensional. We can repeat this process with many other fractals, such as this Sierpinski-esque square (known as the Sierpinski carpet):

It’s made up of eight smaller versions of itself, each of which is scaled down by one-third. Plugging this into the equation, we get:

1/8 = (1/3)^D

log_3(8) = roughly 1.893

So we can conclude that this square fractal is 1.893-dimensional.
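The same answer can be checked by counting. A minimal sketch, assuming the square fractal is the Sierpinski carpet (remove the middle ninth of every square, at every scale); `sierpinski_carpet` is a hypothetical helper. At level n the grid has cells of side (1/3)^n and the number of kept cells should be 8^n, so log(count) / log(3^n) reproduces log_3(8).

```python
import math


def sierpinski_carpet(level: int) -> list[list[bool]]:
    """Return a 3**level x 3**level grid; True marks a cell that is kept."""
    size = 3 ** level
    grid = []
    for row in range(size):
        line = []
        for col in range(size):
            r, c, keep = row, col, True
            # A cell is removed if, at some scale, both of its base-3 digits
            # at that position are 1 (it sits in a middle ninth).
            while r > 0 or c > 0:
                if r % 3 == 1 and c % 3 == 1:
                    keep = False
                    break
                r //= 3
                c //= 3
            line.append(keep)
        grid.append(line)
    return grid


level = 4
filled = sum(cell for row in sierpinski_carpet(level) for cell in row)
print(filled, round(math.log(filled) / math.log(3 ** level), 3))  # 4096 1.893
```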

We can do the same with this cubic version of it (known as the Menger sponge):

This cube is made up of 20 smaller versions of itself, each of which is scaled down by 1/3.

1/20 = (1/3)^D

log_3(20) = roughly 2.727

So we can conclude that this fractal is 2.727-dimensional.
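Where does the 20 come from? A small sketch, assuming the cube is the Menger sponge: split the cube into a 3x3x3 grid and drop the centre of each face plus the very centre, which are exactly the sub-cubes with two or more coordinates in the middle position.

```python
import itertools
import math

# Index the 27 sub-cubes by (x, y, z) with each coordinate in 0..2; 1 is the middle slice.
kept = [
    cell
    for cell in itertools.product(range(3), repeat=3)
    if sum(coord == 1 for coord in cell) < 2  # drop face centres and the very centre
]

print(len(kept))                                    # 20
print(round(math.log(len(kept)) / math.log(3), 3))  # 2.727, i.e. solving 1/20 = (1/3)^D
```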

More Posts from Jupyterjones and Others

Depixellation? Or hallucination?

There’s an application for neural nets called “photo upsampling” which is designed to turn a very low-resolution photo into a higher-res one.

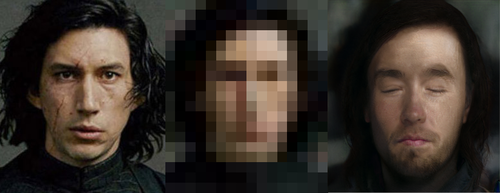

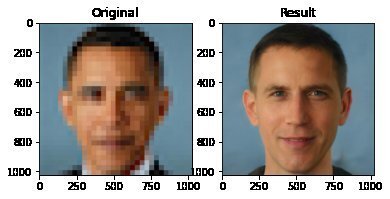

This is an image from a recent paper demonstrating one of these algorithms, called “PULSE: Self-Supervised Photo Upsampling via Latent Space Exploration of Generative Models”.

It’s the neural net equivalent of shouting “enhance!” at a computer in a movie - the resulting photo is MUCH higher resolution than the original.

Could this be a privacy concern? Could someone use an algorithm like this to identify someone who’s been blurred out? Fortunately, no. The neural net can’t recover detail that doesn’t exist - all it can do is invent detail.

This becomes more obvious when you downscale a photo, give it to the neural net, and compare its upscaled version to the original.

As it turns out, there are lots of different faces that can be downscaled into that single low-res image, and the neural net’s goal is just to find one of them. Here it has found a match - why are you not satisfied?
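Why there are many possible answers: downscaling throws information away, so different high-res images can land on the same low-res one. A minimal sketch using simple block averaging as the downscaler (an assumption; the paper's actual downscaling method may differ), with tiny made-up "images":

```python
def downscale(image: list[list[float]], factor: int) -> list[list[float]]:
    """Average each factor x factor block of pixels into one pixel (dimensions must divide evenly)."""
    height, width = len(image), len(image[0])
    return [
        [
            sum(image[r][c] for r in range(i, i + factor) for c in range(j, j + factor)) / factor**2
            for j in range(0, width, factor)
        ]
        for i in range(0, height, factor)
    ]


# Two very different hypothetical 2x2 "images"...
checkerboard = [[0, 100], [100, 0]]
flat_grey = [[50, 50], [50, 50]]

# ...collapse to the same 1x1 low-res image, so the low-res image alone can't tell them apart.
print(downscale(checkerboard, 2), downscale(flat_grey, 2))  # [[50.0]] [[50.0]]
```

An upscaler can only pick one of the many candidates consistent with the low-res input; it has no way to know which one was the original.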

And it’s very sensitive to the exact position of the face, as I found out in this horrifying moment below. I verified that yes, if you downscale the upscaled image on the right, you’ll get something that looks very much like the picture in the center. Stand way back from the screen and blur your eyes (basically, make your own eyes produce a lower-resolution image) and the three images below will look more and more alike. So technically the neural net did an accurate job at its task.

A tighter crop improves the image somewhat. Somewhat.

The neural net reconstructs what it’s been rewarded to see, and since it’s been trained to produce human faces, that’s what it will reconstruct. So if I were to feed it an image of a plush giraffe, for example…

Given a pixellated image of anything, it’ll invent a human face to go with it, like some kind of dystopian computer system that sees a suspect’s image everywhere. (Building an algorithm that upscales low-res images to match faces in a police database would be both a horrifying misuse of this technology and not out of character with how law enforcement currently manipulates photos to generate matches.)

However, speaking of what the neural net’s been rewarded to see - shortly after this particular neural net was released, Twitter user chicken3gg posted this reconstruction:

Others then did experiments of their own, and many of them, including the authors of the original paper on the algorithm, found that the PULSE algorithm had a noticeable tendency to produce white faces, even if the input image hadn’t been of a white person. As James Vincent wrote in The Verge, “It’s a startling image that illustrates the deep-rooted biases of AI research.”

Biased AIs are a well-documented phenomenon. When its task is to copy human behavior, AI will copy everything it sees, not knowing what parts it would be better not to copy. Or it can learn a skewed version of reality from its training data. Or its task might be set up in a way that rewards - or at least doesn’t penalize - a biased outcome. Or the very existence of the task itself (like predicting “criminality”) might be the product of bias.

In this case, the AI might have been inadvertently rewarded for reconstructing white faces if its training data (Flickr-Faces-HQ) had a large enough skew toward white faces. Or, as the authors of the PULSE paper pointed out (in response to the conversation around bias), the standard benchmark that AI researchers use for comparing their accuracy at upscaling faces is based on the CelebA HQ dataset, which is 90% white. So even if an AI did a terrible job at upscaling other faces, but an excellent job at upscaling white faces, it could still technically qualify as state-of-the-art. This is definitely a problem.
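The benchmark point above is just a weighted average. A minimal sketch with made-up per-group scores (hypothetical numbers, not results from the paper), using the 90% share mentioned above:

```python
# Share of each group in a benchmark that is 90% white faces.
share_white, share_other = 0.9, 0.1
# Hypothetical upscaling quality scores (1.0 = perfect); made up for illustration only.
score_white, score_other = 0.95, 0.30

overall = share_white * score_white + share_other * score_other
print(round(overall, 3))  # 0.885
```

A model that does badly on one face in ten still posts a high overall number, because the benchmark barely weighs those faces.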

A related problem is the huge lack of diversity in the field of artificial intelligence. Even an academic project with art as its main application should not have gone all the way to publication before someone noticed that it was hugely biased. Several factors are contributing to the lack of diversity in AI, including anti-Black bias. The repercussions of this striking example of bias, and of the conversations it has sparked, are still being strongly felt in a field that’s long overdue for a reckoning.

Bonus material this week: an ongoing experiment that’s making me question not only what madlibs are, but what even are sentences. Enter your email here for a preview.

My book on AI is out, and you can now get it any of these several ways! Amazon - Barnes & Noble - Indiebound - Tattered Cover - Powell’s - Boulder Bookstore

#beingacomputerprogrammingmajor : Testing out html scripts on Pokemon Go and finding out it actually works.

Note to self: debugging

always remember to remove the Log.d("Well fuck you too") lines and the variables named “wtf” and “whhhyyyyyyy” before pushing anything to git

Calculating the surface area of a sphere. Found on Imgur.

A sand pendulum that creates a beautiful pattern only by its movement.

But why does the ellipse change shape?

The pattern gets smaller because energy is not conserved (and in fact decreases) in the system. The mass in the pendulum gets smaller and the center of mass lowers as a function of time. Just like that, an amazing pattern arises through the laws of physics.
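A minimal sketch of the energy-loss explanation, assuming small swings and a simple exponential damping term (the post doesn't specify a damping model, and this ignores the changing mass). `pendulum_trace` and its default parameters are hypothetical.

```python
import math


def pendulum_trace(t: float, amp_x: float = 1.0, amp_y: float = 0.5,
                   omega: float = 1.0, damping: float = 0.05) -> tuple[float, float]:
    """Bob position at time t for a small-swing 2D pendulum with exponential damping."""
    decay = math.exp(-damping * t)  # energy loss shrinks both swing amplitudes together
    return (amp_x * decay * math.cos(omega * t),
            amp_y * decay * math.sin(omega * t))


# Sample the bob once per swing period: each loop of the ellipse is smaller than the last.
for k in range(4):
    x, y = pendulum_trace(2 * math.pi * k)
    print(f"swing {k}: distance from center = {math.hypot(x, y):.3f}")
```

With equal frequencies in both directions, this ellipse keeps its proportions and only shrinks, so the sketch covers the shrinking, not every change in shape.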

Permutations

Figuring out how to arrange things is pretty important.

Like, if we have the letters {A,B,C}, the six ways to arrange them are: ABC, ACB, BAC, BCA, CAB, CBA.
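Python's standard library can list these arrangements directly with `itertools.permutations`:

```python
from itertools import permutations

arrangements = ["".join(p) for p in permutations("ABC")]
print(arrangements)  # ['ABC', 'ACB', 'BAC', 'BCA', 'CAB', 'CBA']
```

For n distinct items there are n! arrangements, so 3 letters give 3 × 2 × 1 = 6.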

We can say more interesting things about them (e.g. combinatorics), but another great extension is when we make them dynamic.

Like, if we go from ABC to ACB, and back…

We can abstract away from needing to use individual letters, and say these are both “switching the 2nd and 3rd elements,” and it is the same thing both times.

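Each of these switches can be more complicated than that. For example, going from ABCDE to EDACB is really just 1->3->4->2->5->1 (the element in position 1 moves to position 3, the one in position 3 moves to position 4, and so on), and if we do it 5 times we cycle back to the start.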
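A small sketch of this "where does each position go?" view, writing a switch as a dict from old position to new position (1-based, matching the arrows). `apply` and `five_cycle` are hypothetical names.

```python
def apply(mapping: dict[int, int], items: str) -> str:
    """Move the element at position p to position mapping[p]; unlisted positions stay put."""
    result = [""] * len(items)
    for position, item in enumerate(items, start=1):
        result[mapping.get(position, position) - 1] = item
    return "".join(result)


# 1->3->4->2->5->1 written as a mapping of positions.
five_cycle = {1: 3, 3: 4, 4: 2, 2: 5, 5: 1}

state = "ABCDE"
for step in range(1, 6):
    state = apply(five_cycle, state)
    print(step, state)  # step 1 prints EDACB; step 5 prints ABCDE again
```

The simpler switch from before is `apply({2: 3, 3: 2}, "ABC")`, which gives "ACB"; applying it again gives "ABC" back.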

We can also have two switches happening at once, like 1->2->3->1 and 4->5->4. Since the first repeats every 3 steps and the second every 2 steps, it takes 6 repetitions to get back to the start.
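In general, the number of repetitions needed (the permutation's order) is the least common multiple of its cycle lengths. A minimal sketch using the same dict-of-positions representation as above; `order` is a hypothetical helper, and `math.lcm` with several arguments needs Python 3.9+.

```python
import math


def order(mapping: dict[int, int]) -> int:
    """How many times the permutation must be applied to return every position to the start."""
    # Follow each cycle once and record its length; the order is the lcm of the cycle lengths.
    seen, lengths = set(), []
    for start in mapping:
        if start in seen:
            continue
        length, position = 0, start
        while position not in seen:
            seen.add(position)
            position = mapping[position]
            length += 1
        lengths.append(length)
    return math.lcm(*lengths)


# 1->2->3->1 together with 4->5->4
print(order({1: 2, 2: 3, 3: 1, 4: 5, 5: 4}))  # 6
```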

Then, let’s extend this a bit further.

First, let’s get a better notation, and use (1 2 3) for what I called 1->2->3->1 before.

Let’s show how we can turn these permutations into a group.

Then, let’s say the identity is just keeping things the same, and call it id.

Next, this idea of doing one permutation after another gives us the group operation: do one permutation and then the other. For various historical reasons, the combination of permutation A and then permutation B is written B·A.

This is closed, because permuting all the elements and then permuting them again still leaves exactly one of each element, in some order.

Inverses exist, because you can reverse any permutation by moving everything from its new position back to its old position.

Associativity will be left as an exercise to the reader (read: I don’t want to prove it)
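For the small case of three elements, the group properties can at least be brute-force checked. A minimal sketch; `from_cycles`, `compose`, and `inverse` are hypothetical names, a permutation is stored as a tuple where entry i says where position i+1 goes, and `compose(b, a)` follows the B·A convention above (A first, then B). Checking all 6 permutations of {1, 2, 3} is evidence, not a proof for every size, so the exercise still stands.

```python
from itertools import permutations, product

Perm = tuple[int, ...]  # perm[i] is where position i+1 is sent (1-based values)


def from_cycles(n: int, *cycles: tuple[int, ...]) -> Perm:
    """Build a permutation of 1..n from cycle notation, e.g. (1, 2, 3) means 1->2->3->1."""
    image = list(range(1, n + 1))
    for cycle in cycles:
        for a, b in zip(cycle, cycle[1:] + cycle[:1]):
            image[a - 1] = b
    return tuple(image)


def compose(b: Perm, a: Perm) -> Perm:
    """B·A: do permutation A first, then B."""
    return tuple(b[a[i] - 1] for i in range(len(a)))


def inverse(a: Perm) -> Perm:
    """Send every position back to where it came from."""
    result = [0] * len(a)
    for i, target in enumerate(a, start=1):
        result[target - 1] = i
    return tuple(result)


n = 3
identity = tuple(range(1, n + 1))
group = set(permutations(range(1, n + 1)))  # all 6 permutations of {1, 2, 3}

assert all(compose(b, a) in group for a, b in product(group, repeat=2))       # closure
assert all(compose(a, identity) == a == compose(identity, a) for a in group)  # identity
assert all(compose(inverse(a), a) == identity for a in group)                 # inverses
assert all(compose(c, compose(b, a)) == compose(compose(c, b), a)             # associativity
           for a, b, c in product(group, repeat=3))
print(from_cycles(3, (1, 2, 3)))  # (2, 3, 1)
```

Doing (2 3) and then (1 2) gives (1 2 3), while the other order gives (1 3 2), which is why the order of writing B·A matters.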
